#Azure data storage explorer how to

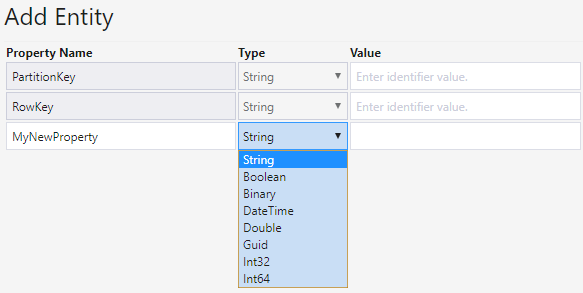

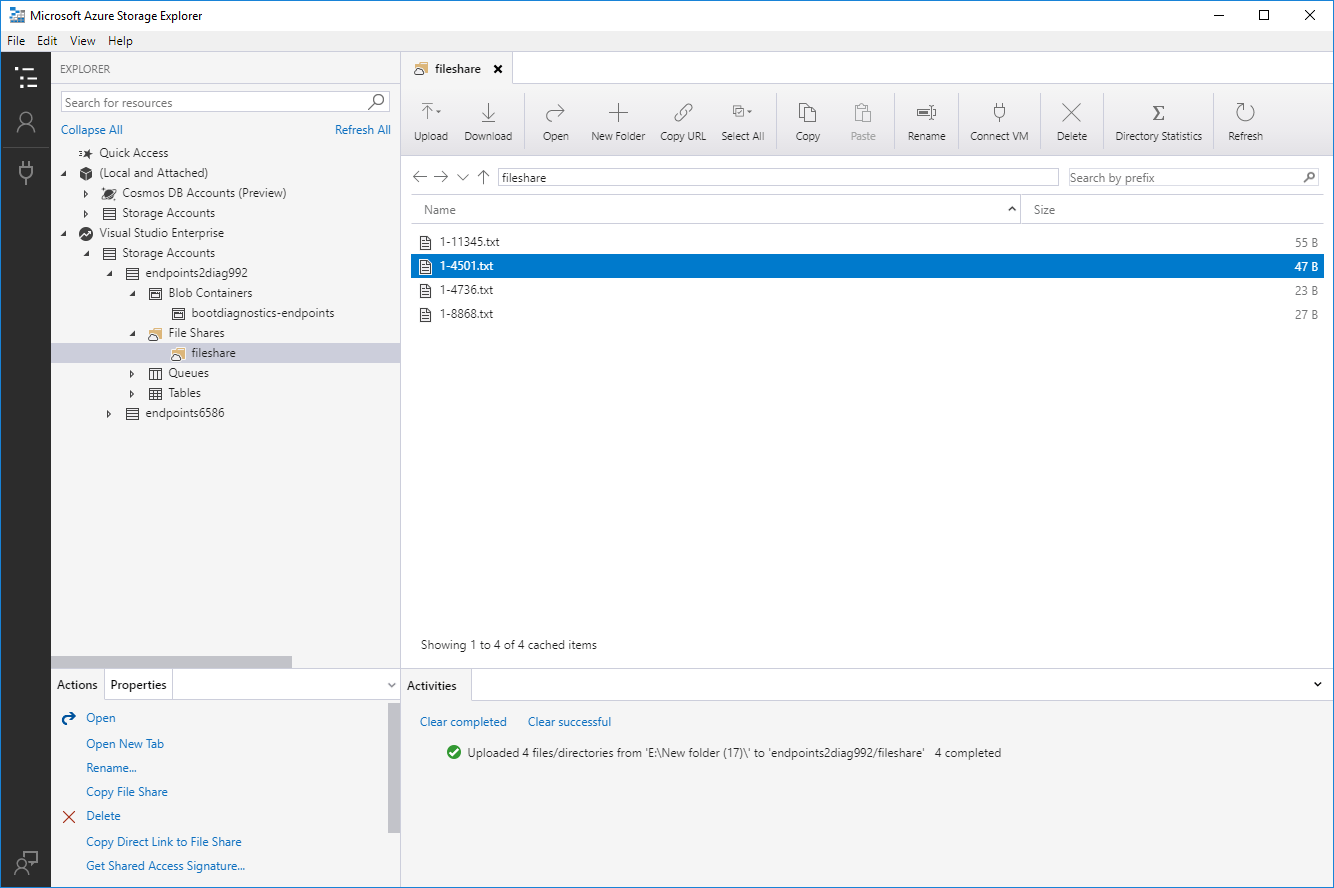

Azure Blob Storage custom field extension allows you to fetch your data (documents, images, videos, etc.) from your Azure Blob Storage account and display it in a field of a content type in your stack. Thus, while creating entries, you can select any data (as mentioned above) as the input value for the field in the content type of your stack. This step-by-step guide explains how to create an Azure Blob Storage custom field extension for your content types in Contentstack.

The data export method for a View is almost the same as before. The only difference is to select "Copy data from one or more tables or views" in the "Specify Table Copy or Query" step.

Start Azure Storage Explorer, open the target table that the data will be imported into, and click Import on the toolbar. Select the CSV file just exported, then check the data type of each field and change it if necessary. In my example, I only need to change RequestTimeUtc to the DateTime type.

Click Insert, and your data will be imported into Azure Storage Table. You can also verify the data on Azure Portal.

Creative Commons Attribution-ShareAlike 4.

#Azure data storage explorer windows

Set the row delimiter to match the Windows style (CRLF) and the column delimiter to a comma.

Another method is to create a View, still using the same SQL statement.
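To make the delimiter settings concrete, here is a minimal Python sketch of what such a file looks like. It is only an illustration, not part of the wizard: the `rows_to_csv` helper and the sample row are hypothetical, while the column names and `LT996` are taken from the example in this post.

```python
import csv
import io

def rows_to_csv(rows, fieldnames):
    """Serialize dict rows to CSV text: header row, comma columns, CRLF row endings."""
    buffer = io.StringIO(newline="")  # no newline translation, keep CRLF as written
    writer = csv.DictWriter(
        buffer,
        fieldnames=fieldnames,
        delimiter=",",          # column delimiter: comma
        lineterminator="\r\n",  # row delimiter: Windows style
    )
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()

# Hypothetical sample row, shaped like the exported LinkTracking data
sample_rows = [
    {"PartitionKey": "LT996", "RowKey": "row-1", "RequestTimeUtc": "2020-05-17T08:30:15.120"},
]
csv_text = rows_to_csv(sample_rows, ["PartitionKey", "RowKey", "RequestTimeUtc"])
print(repr(csv_text))
```

Every row, including the header, ends with `\r\n`, which is the same layout the export settings above produce.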

If your table has bit columns representing bool values, we can use a CASE WHEN statement.

For NULL values in string columns, we must use an empty string, or you will end up with the string value "NULL": CASE WHEN l.AkaName IS NOT NULL THEN l.AkaName

In my example, I don't have these two, so the final SQL for me is: SELECT CONVERT(char(30), lt.RequestTimeUtc, 126) AS RequestTimeUtc

Now, with our SQL in place, we are ready to export our data to CSV. There are three ways to do it.

If the amount of data in your table is not very large, you can use Azure Data Studio, a cross-platform tool, to complete the export. After executing the SQL statement in Azure Data Studio, click the Export to CSV button in the toolbar to the right of the result grid to save the result as a CSV file with column names.

But this method has disadvantages. The result grid has a limit on the amount of data. It limits not only the number of entries but also the number of characters displayed in a column. If you encounter a large amount of data in an enterprise scenario, it will definitely blow up. So there are two other methods for exporting data.

The classic, old but not obsolete SSMS provides a dedicated data export wizard that supports the CSV format. We can still use the SQL we just wrote to export the data, but this time it will not be exported from the results grid (SSMS supports that as well, but it faces the same data volume limit).

Right-click on the database and select Tasks > Export Data. Select SQL Server Native Client as the data source and connect to your own database. Then choose Flat File Destination as the destination and specify a CSV path. Choose "Write a query to specify the data to transfer".
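The bit and NULL handling described in this section can be illustrated outside SQL too. The Python sketch below is an assumption-laden illustration, not the original query: the helper names are hypothetical, and rendering a bit as the text `true` or `false` is my assumption about what the CASE WHEN statement would emit.

```python
from typing import Optional

def bit_to_text(value: Optional[bool]) -> str:
    """Render a SQL bit as 'true' or 'false' (assumed target text); NULL becomes ''."""
    if value is None:
        return ""
    return "true" if value else "false"

def null_to_empty(value: Optional[str]) -> str:
    """Replace a NULL string with '' so the CSV never contains the literal text NULL."""
    return "" if value is None else value

# Hypothetical values standing in for a bit column and the AkaName column
print(bit_to_text(True), bit_to_text(False), repr(null_to_empty(None)))
```

The point of both helpers is the same as in the SQL: decide explicitly what ends up in the CSV cell instead of letting the export tool write its own representation.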

#Azure data storage explorer iso

We need to export the data in the SQL Server table to CSV format in order to import it into Azure Storage Table. But we have to do some processing on the data before it can be used.

Taking the export of a single table as an example, Azure Storage Table requires two fields: PartitionKey and RowKey. SQL Server tables often use one or more columns as the primary key, and there is no fixed naming constraint. Therefore, the first thing we have to deal with is the primary key.

My LinkTracking table uses a GUID-type Id as the primary key, which can be converted to RowKey. As for PartitionKey, the original table does not have one, so we can use a fixed string, such as 'LT996'. You can get PartitionKey and RowKey through a simple SELECT statement.

Then we need to format certain data types such as DateTime, which must be converted to ISO 8601 format. Luckily, in T-SQL we can convert a DateTime to ISO 8601 with: CONVERT(char(30), ,126)
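As a rough illustration of the shape the exported values should have, here is a small Python sketch. It does not replace the SQL: the helper names and the sample GUID are hypothetical, `LT996` comes from the example above, and the timestamp format is only comparable to what T-SQL style 126 produces.

```python
import uuid
from datetime import datetime

def to_entity_keys(row_id: uuid.UUID, partition: str = "LT996") -> dict:
    """Map a GUID primary key to the two fields Azure Storage Table requires."""
    return {"PartitionKey": partition, "RowKey": str(row_id)}

def to_iso8601(value: datetime) -> str:
    """Format a datetime as ISO 8601 text with millisecond precision."""
    return value.isoformat(timespec="milliseconds")

# Hypothetical Id and request time for a single LinkTracking row
sample_id = uuid.UUID("3f2504e0-4f89-11d3-9a0c-0305e82c3301")
keys = to_entity_keys(sample_id)
stamp = to_iso8601(datetime(2020, 5, 17, 8, 30, 15, 120000))
print(keys, stamp)
```

Using one fixed PartitionKey keeps the mapping simple; whether a single partition suits your workload is a separate design question.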

#Azure data storage explorer install

Recently, there was a demand to switch our data storage from a SQL Server database to Azure Storage Table. However, neither SSMS nor Azure Portal provides a direct import function. You may consider writing a tool to import the data, but that isn't actually necessary. I managed to do it with just a few mouse clicks.

But the first thing to warn everyone about is that relational databases like SQL Server are not the same as the NoSQL service provided by Azure. Before proceeding, please do your research and make sure your business is really suited to Azure Storage Table (or Cosmos Table).

- Of course, you need an Azure Storage Account. Create an empty table in it to import the data into, for example: LinkTracking
- Download and install Microsoft Azure Storage Explorer
- Download and install SQL Server Management Studio (Windows only) or Azure Data Studio (cross-platform)

Export Data
